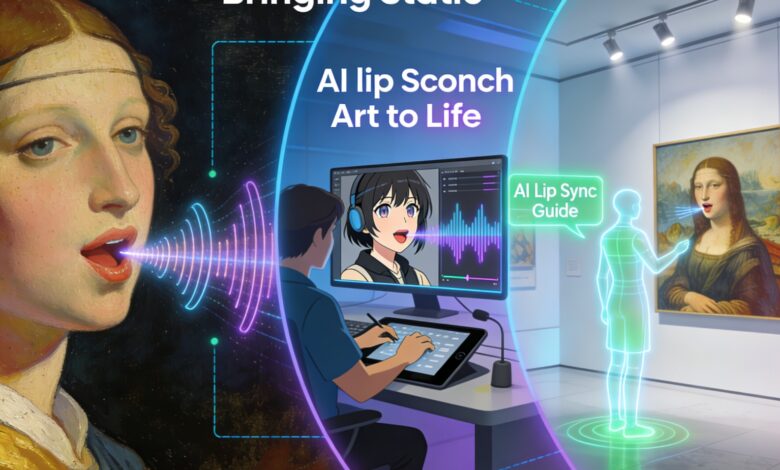

AI Lip Sync Guide: Bring Static Art to Life

We are living in a golden age of digital character creation. Whether you are an avid reader of manga, a dedicated gamer immersed in RPG lore, or a prompt engineer conjuring stunning portraits with Midjourney and Stable Diffusion, you likely have a gallery full of beloved characters. However, there has always been a barrier between the creator and the creation: the image remains frozen. For years, fans and creators have dreamed of hearing their favorite static characters speak, but traditional animation demanded too much skill and time. This is where the emergence of AI lip sync technology changes the game.

The desire to bring these characters to life is no longer a futuristic fantasy; it is an accessible reality. By utilizing advanced machine learning algorithms, modern tools can now analyze a voice recording and map its sounds onto the face of a still image. This technology, broadly known as lip sync AI, bridges the gap between static art and dynamic storytelling. In this post, we will explore how you can take your manga panels, AI-generated portraits, and game screenshots and transform them into fully animated, talking videos that capture the imagination of your audience.

Why Use an AI Lip Sync Generator for Character Animation?

The transition from a static image to a talking video used to require a studio full of animators, expensive software like Live2D or After Effects, and weeks of work. Today, an AI lip sync generator democratizes this process, offering powerful advantages that appeal to casual fans and professional content creators alike.

Unparalleled Speed and Efficiency

The most immediate benefit of using AI for this task is the sheer reduction in production time. Traditional lip-syncing involves “phoneme mapping,” where an animator manually matches a mouth shape (often called a viseme) to each sound in the dialogue track. This is a painstaking, frame-by-frame process. An AI-powered generator automates it: the software analyzes the audio waveform together with the facial geometry of the uploaded image and, within minutes or even seconds, renders a video in which the mouth movements track the speech closely. For content creators who need to post daily on platforms like TikTok or YouTube Shorts, this speed is not just a luxury; it is a necessity for staying relevant.
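To make the phoneme mapping idea concrete, here is a minimal Python sketch of the manual step that a generator automates: turning timed phonemes into one mouth shape (viseme) per video frame. The `PHONEME_TO_VISEME` table, the `TimedPhoneme` type, and the `visemes_per_frame` function are all hypothetical names invented for illustration. Real tools typically learn this mapping from data rather than using a lookup table, so treat this as a simplified picture, not how any particular product works.

```python
from dataclasses import dataclass

# Illustrative table using ARPAbet-style phoneme symbols.
# Assumption: these groupings are a simplification for demonstration only.
PHONEME_TO_VISEME = {
    "P": "closed", "B": "closed", "M": "closed",
    "F": "teeth_on_lip", "V": "teeth_on_lip",
    "AA": "open", "AE": "open", "AH": "open",
    "IY": "wide", "EH": "wide",
    "UW": "round", "OW": "round",
}
REST = "rest"  # mouth shape used for silence and unknown sounds


@dataclass
class TimedPhoneme:
    symbol: str
    start: float  # seconds from the start of the clip
    end: float    # seconds from the start of the clip


def visemes_per_frame(phonemes, duration, fps=24):
    """Return one viseme label for each video frame of the clip."""
    frame_count = round(duration * fps)
    frames = [REST] * frame_count
    for p in phonemes:
        viseme = PHONEME_TO_VISEME.get(p.symbol, REST)
        first = int(p.start * fps)
        last = min(frame_count, int(p.end * fps))
        # Every frame that falls inside the phoneme gets its mouth shape.
        for i in range(first, last):
            frames[i] = viseme
    return frames
```

An animator doing this by hand would fill in the same kind of per-frame list while listening to the audio. The appeal of an AI generator is that it produces the timing and the mouth shapes directly from the recording.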

Breaking the Skill Barrier

Previously, animating a manga character or a custom game avatar required a deep understanding of rigging. You would need to cut the image into layers—separating the eyes, mouth, and hair—and then create a virtual skeleton. This is a steep learning curve that discourages most creative ideas before they even start. AI tools remove this barrier entirely. You do not need to know how to draw, rig, or keyframe. The algorithms are trained to recognize faces in various art styles, from the sharp lines of anime to the photorealistic textures of AI art. If you have an image and an audio file, you are qualified to create animation.

Breathing Life into “Silent” Media

There is a unique emotional connection that forms when a character speaks. For manga readers, characters often have a “voice” that exists only in the reader’s head. By using these tools, you can actualize that voice, creating “motion comics” that offer a completely new way to experience the story. Similarly, for AI-generated characters—which often feel like beautiful but empty shells—adding a voice and facial movement imbues them with personality and agency. It transforms a gallery of JPEGs into a cast of actors ready to perform, opening up narrative possibilities that static images simply cannot convey.

Top Scenarios for Animating Characters with Lip Sync AI

The versatility of lip sync AI tools means they can be applied across a vast spectrum of creative endeavors. From fan fiction to professional marketing, here is how you can utilize this technology to solve specific creative needs.

1. Creating Immersive “Motion Comics” and Manga Dubs

Manga and comic dubs are a massive genre on YouTube, where voice actors read the lines of comic panels. However, the visuals are usually static, relying on camera pans to create interest.

- The Workflow: You can elevate this format by taking the specific panel where a character is speaking, cropping the character, and running it through an AI generator with the voice actor’s audio.

- The Result: Instead of just hearing the line, the viewer sees the manga character actually delivering it. This increases viewer retention and makes the dramatic moments hit much harder. It allows creators to produce semi-animated series without the budget of an anime studio.
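The cropping step in this workflow can be sketched in a few lines of Python. The helper below is hypothetical: it assumes you already know the face's bounding box in pixels (found by eye or with a detector of your choice) and that your generator works better with some margin around the face, which may or may not be true of the tool you use. It returns a box in (left, upper, right, lower) order, the order that image libraries such as Pillow accept for cropping.

```python
def padded_crop_box(face_box, image_size, padding=0.5):
    """Expand a face box by a padding fraction and clamp it to the image.

    face_box:   (left, upper, right, lower) in pixels
    image_size: (width, height) of the full panel in pixels
    padding:    extra margin on each side, as a fraction of the face size
                (0.5 is an illustrative default, not a recommended value)
    """
    left, upper, right, lower = face_box
    width, height = image_size
    pad_x = int((right - left) * padding)
    pad_y = int((lower - upper) * padding)
    # Clamp so the crop never extends past the edges of the panel.
    return (
        max(0, left - pad_x),
        max(0, upper - pad_y),
        min(width, right + pad_x),
        min(height, lower + pad_y),
    )
```

For example, `padded_crop_box((100, 100, 200, 200), (1000, 1000))` gives `(50, 50, 250, 250)`, which keeps the character's hair and chin in frame rather than cutting tightly around the mouth.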

2. Bringing RPG and Game Avatars to Life

Games like Baldur’s Gate 3, Cyberpunk 2077, or Final Fantasy XIV allow players to create incredibly detailed custom characters. Often, players write extensive backstories for these avatars, but outside of the game, they are just screenshots.

- The Workflow: Take a high-resolution screenshot of your character’s face (neutral expression works best). Record a monologue or use an AI text-to-speech voice that fits their personality.

- The Result: You can create “video logs” or lore videos from your character’s perspective. This is excellent for Dungeons & Dragons players who want to introduce their character to the party, or for gaming YouTubers who want their avatar to host their video essays.

3. Giving a Voice to AI-Generated Art (Waifu/Husbando Culture)

The explosion of AI art generators has led to millions of users creating their ideal characters, often referred to as “Waifus” or “Husbandos.” Until now, these have been purely visual.

- The Workflow: Generate your ideal character using Midjourney or Stable Diffusion. Use a voice cloning tool to generate a unique voice that matches the visual aesthetic (e.g., a soft voice for an ethereal elf, a gruff voice for a cyberpunk mercenary). Sync them using the lip sync tool.

- The Result: You create a virtual companion that feels responsive. This is the foundation of the growing trend of AI influencers and virtual girlfriends/boyfriends, allowing for a level of interactivity that static images lack.

4. Interactive NPCs for Game Development

Indie game developers often struggle with the budget for full cinematic cutscenes.

- The Workflow: Instead of 3D modeling full facial animations, developers can use 2D sprites or concept art. By running dialogue lines through a lip sync generator, they can create talking heads for dialogue boxes.

- The Result: This provides a premium feel to visual novels or RPGs at a fraction of the cost. It allows players to see the emotion in the character’s delivery, not just read the text, enhancing immersion.

5. Social Media Storytelling and Memes

The viral nature of short-form content (Reels, TikTok) relies on grabbing attention quickly.

- The Workflow: Take a popular meme format or a trending anime character. Add a funny audio clip or a trending soundbite.

- The Result: An animated meme is significantly more engaging than a static one. Seeing a stoic character like Geralt of Rivia or a serious anime villain singing a pop song or reciting a funny copypasta plays on the mismatch between character and voice, with a touch of the “uncanny valley” effect, for humor that drives shares and engagement.

6. Educational Avatars and Mascots

Teachers and educational content creators can use anime or cartoon avatars to make learning less intimidating for younger audiences.

- The Workflow: Create a friendly mascot character. Record the lesson audio.

- The Result: A talking animated character can hold a student’s attention better than a static PowerPoint slide. It humanizes the digital classroom and can make complex topics feel more approachable through the use of a “guide” character.

Key Features of the Best AI Lip Sync Generator Tools

Not all tools are created equal. When looking for the right software to animate your manga or game characters, there are specific features you should look for to ensure high-quality output.

Advanced Facial Mesh and Micro-Expressions

A basic free AI lip sync tool might only move the mouth up and down, making the character look like a ventriloquist puppet. High-end generators, however, use advanced facial meshing, which means the AI models the structure of the face. It doesn’t just move the lips; it adjusts the jaw, twitches the nose slightly, and moves the cheeks to simulate muscles contracting. Crucially, the best tools also add procedurally generated eye blinks and subtle head movements (nodding, tilting). These “micro-movements” are essential for selling the illusion of life; without them, the character looks robotic, with a fixed stare.
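As a rough illustration of what “procedurally generated” means here, the Python sketch below schedules blinks at randomized intervals instead of on a fixed beat. The function name `blink_times` and the 2 to 6 second gap range are assumptions chosen for the example; actual tools may use very different timing models.

```python
import random


def blink_times(duration, min_gap=2.0, max_gap=6.0, seed=None):
    """Return the times (in seconds) at which blinks should start.

    Gaps between blinks are drawn uniformly from [min_gap, max_gap].
    Assumption: the default range is illustrative, not measured.
    Passing a seed makes the schedule repeatable between renders.
    """
    rng = random.Random(seed)
    times = []
    t = rng.uniform(min_gap, max_gap)
    while t < duration:
        times.append(t)
        t += rng.uniform(min_gap, max_gap)
    return times
```

Because the gaps vary, the character never blinks in a mechanical rhythm, which is a large part of why these small movements read as natural.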

Multi-Language and Voice Cloning Compatibility

The global nature of manga and gaming means content often needs to cross language barriers. Top-tier generators are language-agnostic. They process the sound (phonemes), not just the text. This means you can take a Japanese anime character and make them speak English, French, or Spanish with accurate lip synchronization. Furthermore, the best platforms often integrate with voice cloning technology. This allows you to upload a 10-second sample of a voice and have the AI generate new dialogue in that exact timbre, which can then be immediately lip-synced to the image.

Style Preservation and Art Consistency

One of the biggest challenges in animating 2D art is keeping the art style intact. Some older AI tools would warp the face, making an anime character look like a distorted real human. Modern AI lip sync generators are trained to respect the source material. They can distinguish between the flat shading of a cel-shaded game character, the ink lines of a manga, and the noise textures of a retro video game. The ability to animate the mouth without blurring the surrounding pixels or destroying the artistic intent of the original drawing is a critical feature to look for.

Customization and Emotion Control

While automatic syncing is great, creative control is better. The leading tools offer parameters to adjust the intensity of the animation. You might want an exaggerated, cartoony mouth movement for a comedic scene, or a subtle, constrained movement for a dramatic whisper. Some advanced interfaces allow you to select “emotions” (happy, sad, angry) which act as a filter over the lip sync, changing the shape of the eyebrows and the curvature of the mouth to match the sentiment of the audio, ensuring the visual acting matches the vocal performance.
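An intensity parameter of this kind can be pictured as a simple multiplier on how far the mouth opens in each frame. The Python sketch below is a hypothetical model, assuming the animation is stored as per-frame openness values between 0.0 (closed) and 1.0 (fully open); the name `apply_intensity` is made up for this example and does not come from any specific tool.

```python
def apply_intensity(openness, intensity=1.0):
    """Scale per-frame mouth openness by an intensity factor.

    openness:  list of floats in [0.0, 1.0], one per frame
    intensity: values below 1.0 give subtler movement,
               values above 1.0 give exaggerated movement
    Results are clamped to [0.0, 1.0] so the mouth never over-opens.
    """
    if intensity < 0:
        raise ValueError("intensity must be non-negative")
    return [min(1.0, max(0.0, value * intensity)) for value in openness]
```

With an intensity of 0.5, a frame at 0.5 openness drops to 0.25, which suits a dramatic whisper. With an intensity of 2.0, a frame at 0.75 is clamped to 1.0, giving the wide, cartoony movement of a comedic scene.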

Conclusion: The Future of Character Interaction is Here

The convergence of visual art and AI voice technology has opened a new frontier for digital storytelling. Whether you are a fan wanting to see your favorite manga panel in motion, a gamer looking to immortalize your avatar, or a creator building the next generation of virtual influencers, lip sync AI is the key that unlocks these possibilities. We are moving away from a passive consumption of static images toward an era of active, dynamic engagement with the characters we love. By mastering these tools today, you are not just animating a picture; you are breathing life into digital dreams.